Azure Blob Storage Lifecycle Management – Another cost optimization ‘must have’ skill for the Azure Admins

For the last few days there has been speculation about my 100th blog post, since I have not posted for almost two weeks, and a few of my close friends and followers have also asked me about my next post. To end the speculation, today I am publishing my 100th post. In it I am going to write in detail about Azure Blob Lifecycle Management and why it has become an important skill for Azure techies.

Fig: Picture credit to Royalty Free Photos from Pexels.com

Why have I chosen this topic as my 100th post?

In today’s world, the data stored in the cloud is growing at an exponential rate because of its lower cost and availability, and most enterprises are moving their data to the cloud. More data in the cloud means more cost, and controlling storage cost will be one of the primary responsibilities of Azure architects and administrators. This is a grey area where the technologies are still evolving and not much has been written so far.

What is the typical storage cost in an enterprise-level Azure infrastructure?

In a typical Azure IaaS-driven enterprise, storage accounts for around 25-30% of the overall Azure cost. This is an area with a lot of scope for optimization, provided it is planned properly. Generally, storage is the second-largest cost driver, just after compute.

Why is it important to fully understand storage lifecycle management?

Nowadays, for many organizations storage is already all about the cloud, and hardly anyone purchases costly storage devices anymore. Over the last decade we have witnessed massive layoffs and cost-cutting at storage companies, and many acquisitions and mergers as they fight for survival.

Since data is moving into the cloud very fast, it is also important to manage the cost of the growing storage needs in the cloud. You can keep these costs under control if you plan data storage around parameters such as frequency of access and retention period. Data stored in the cloud differs in how it is generated, processed, and accessed over its lifetime. Some data is actively accessed and modified throughout its lifetime. Some data is accessed frequently early in its lifetime, with access dropping drastically as the data ages. Some data remains idle in the cloud and is rarely, if ever, accessed once stored.

There is no dedicated storage admin role in the cloud, so as an Azure administrator it is important that you understand all of this and plan with the application teams in advance to take advantage of the large pricing difference between storage tiers. If you plan well, you can easily save 30 to 40% of the storage cost in a year and showcase it as a KPI to management. This is an area that will give you high visibility with upper management if you accomplish it in a cost-effective way.

Also, the Azure Storage Lifecycle Management feature is free of charge during the preview, so you don’t need to buy any extra license for it.

What to do once you understand the data access patterns of each application?

Once you understand each application’s data access patterns, you can place its data in the storage tier that best fits those patterns. Azure Blob storage addresses this need with differentiated storage tiers, each with its own pricing model.

Azure Blob Storage Lifecycle Management

Azure Blob Storage Lifecycle Management is a preview feature, and it is a really interesting one that I think deserves a detailed discussion. To understand it properly, you should first know about Azure blob-level tiering, and before that, a few words on the types of enterprise data are also important.

What are the types of data?

There are generally three types of data in an enterprise storage architecture. They are as follows:

Hot Data: Data that is accessed very frequently. Azure has the Hot storage tier for this purpose. By default, when you provision storage, everything is in the Hot tier in Azure.

Cold Data: Data that is infrequently accessed. Azure has the Cool storage tier for this purpose, which is meant for data that is stored for at least 30 days.

Archive Data: Data that is rarely accessed. Azure has recently added the Archive tier, which is optimized for storing data that is rarely accessed and stored for at least 180 days, with flexible latency requirements (on the order of hours). The Archive tier is only available at the blob level, not at the storage account level.
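When reviewing an application’s data with its owners, these three categories can be turned into a simple first-pass rule of thumb. The Python sketch below is illustrative only: `suggest_tier` is a hypothetical helper, and reusing the 30-day and 180-day storage periods as access-recency thresholds is my own assumption, not an Azure rule.

```python
def suggest_tier(days_since_last_access: int, remaining_retention_days: int) -> str:
    """Suggest Hot, Cool, or Archive for a blob.

    Hypothetical helper; the 30/180-day thresholds are illustrative assumptions.
    """
    # Archive: not read for ~6 months and expected to stay stored for 180+ days.
    # Any later read must tolerate rehydration latency on the order of hours.
    if days_since_last_access >= 180 and remaining_retention_days >= 180:
        return "Archive"
    # Cool: not read for a month or more and expected to stay stored for 30+ days.
    if days_since_last_access >= 30 and remaining_retention_days >= 30:
        return "Cool"
    # Hot: frequently read or short-lived data.
    return "Hot"


if __name__ == "__main__":
    for last_access, retention in [(2, 365), (45, 365), (200, 365), (200, 60)]:
        print(last_access, retention, suggest_tier(last_access, retention))
```

The retention check matters as much as the access check: moving short-lived data to a cooler tier can cost more than it saves.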

What is Azure Blob Level Tiering?

Azure blob-level tiering is the feature that lets you move individual blobs between the Hot, Cool, and Archive tiers.

What is Azure Blob Storage Lifecycle Management?

It offers a rule-based policy which you can use to transition your data to the best access tier and to expire data at the end of its lifecycle.

Lifecycle management policy helps you:

- Transition blobs to a cooler storage tier (Hot to Cool, Hot to Archive, or Cool to Archive) to optimize for performance and cost

- Delete blobs at the end of their lifecycles

- Define rules to be executed once a day at the storage account level (it supports both GPv2 and Blob storage accounts)

- Apply rules to containers or a subset of blobs (using prefixes as filters)
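To show how these capabilities fit together in a single rule, here is a minimal Python sketch that builds a policy in which block blobs matching a prefix move to Cool, then to Archive, and are finally deleted, based on days since last modification. The field names follow the lifecycle policy JSON schema as Microsoft documents it today; the preview schema may have differed slightly, so compare against the documentation before use. The rule name, the `logs/` prefix (container `logs`), the day counts, and the ordering check are all hypothetical choices of mine.

```python
import json


def tiering_rule(name, prefixes, cool_after_days, archive_after_days, delete_after_days):
    """Build one lifecycle rule that tiers block blobs down and finally deletes them."""
    # Sanity check (my own, not an Azure requirement): tier down before deleting.
    if not cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("expected cool < archive < delete day thresholds")
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            "filters": {
                "blobTypes": ["blockBlob"],
                # Each prefix starts with the container name, e.g. "container/path".
                "prefixMatch": list(prefixes),
            },
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                }
            },
        },
    }


# Hypothetical example: tier the "logs" container down over a year, then delete.
policy = {"rules": [tiering_rule("logs-tiering", ["logs/"], 30, 180, 365)]}
print(json.dumps(policy, indent=2))
```

The printed JSON is the kind of document that goes into the lifecycle management code view of the storage account.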

Which storage account types support blob-level tiering?

It’s only available with General Purpose v2 (GPv2) and Blob storage accounts.

Pricing comparison of the storage tiers

Let’s see what 1 TB of block blob data costs per month in the East US region.

| Block Blob | Hot (Price in USD) | Cool (Price in USD) | Archive (Price in USD) | Region |
|---|---|---|---|---|
| Size (1 TB) | 20.8 | 15.2 | 2 | East US |
| Percentage Savings | 0% | 26.9% | 90.4% | |

As you can see above, changing the storage tier gives you a very good cost advantage. The Cool tier is about 27% cheaper than the Hot tier, and the Archive tier is about 90.4% cheaper than the Hot tier. With this calculation, you can easily see the cost benefit you will get if you identify and earmark data for storage tiering.
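The arithmetic behind this comparison is easy to script, and the same calculation gives a rough blended bill once you know how your data splits across tiers. A minimal Python sketch: the prices are the per-TB figures from the table, while the 10 TB total and the 20/30/50 split are hypothetical. It counts storage capacity only; Cool and Archive also carry higher read, transaction, early-deletion, and rehydration charges that are not modeled here.

```python
# Monthly price in USD for 1 TB of block blob data in East US, from the table above.
PRICE_PER_TB = {"Hot": 20.8, "Cool": 15.2, "Archive": 2.0}


def savings_vs_hot(prices: dict) -> dict:
    """Percentage saved per tier relative to Hot, rounded to one decimal place."""
    hot = prices["Hot"]
    return {tier: round((hot - price) / hot * 100, 1) for tier, price in prices.items()}


def blended_monthly_cost(total_tb: float, share_by_tier: dict, prices: dict = PRICE_PER_TB) -> float:
    """Capacity cost only, for total_tb split across tiers by fractional shares summing to 1."""
    if abs(sum(share_by_tier.values()) - 1.0) > 1e-9:
        raise ValueError("tier shares must add up to 1")
    return sum(total_tb * share * prices[tier] for tier, share in share_by_tier.items())


print(savings_vs_hot(PRICE_PER_TB))

# Hypothetical 10 TB estate: everything Hot versus a 20/30/50 Hot/Cool/Archive mix.
all_hot = blended_monthly_cost(10, {"Hot": 1.0})
mixed = blended_monthly_cost(10, {"Hot": 0.2, "Cool": 0.3, "Archive": 0.5})
print(f"all Hot: {all_hot:.2f} USD, mixed: {mixed:.2f} USD, saving {100 * (1 - mixed / all_hot):.1f}%")
```

Shifting the mix toward Cool and Archive lowers the capacity bill, but only for data that is genuinely read rarely; otherwise the higher access charges can eat into the savings.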

How to get started with Azure Blob Lifecycle Management?

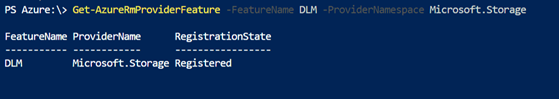

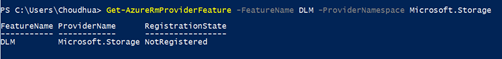

Now let’s see how to enable the storage data lifecycle management (DLM) feature in your subscription; without it, you can’t proceed much further. To enable it, register the DLM feature with the Azure Resource Manager provider using the PowerShell script shown below.

When I checked a day later, the DLM feature had been automatically activated in my subscription.

After a few days of use, I also received an email from a Microsoft representative asking for my feedback on Blob lifecycle management.

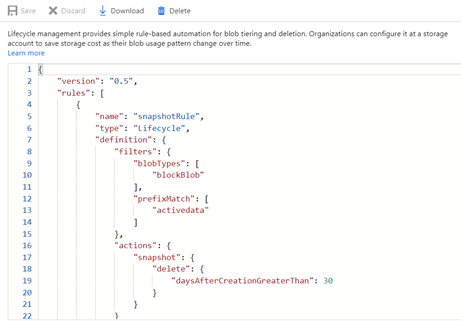

Now let’s see how to test an Azure lifecycle policy. In this example, we want to make sure that all snapshots in the storage account are deleted after a specific period of time.

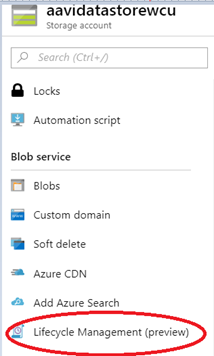

First, go to Lifecycle Management in your Azure storage account.

In the right-hand pane, add the policy code.

Next, save the lifecycle policy.
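For the snapshot scenario above, the policy code added in the pane could look like the JSON printed by this Python sketch. It assumes the currently documented schema, where a `snapshot` action with `delete` and `daysAfterCreationGreaterThan` removes snapshots by age; the rule name `delete-old-snapshots`, the 90-day period, and the `backups/vm` prefix in the usage note are hypothetical placeholders.

```python
import json
from typing import List, Optional


def snapshot_cleanup_policy(days: int, prefixes: Optional[List[str]] = None) -> dict:
    """Lifecycle policy with one rule that deletes blob snapshots older than `days` days."""
    if days < 1:
        raise ValueError("days must be at least 1")
    # blobTypes is required. prefixMatch is optional and narrows the rule to
    # "container/prefix" paths; without it, every block blob in the account is covered.
    filters = {"blobTypes": ["blockBlob"]}
    if prefixes:
        filters["prefixMatch"] = list(prefixes)
    return {
        "rules": [
            {
                "enabled": True,
                "name": "delete-old-snapshots",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": filters,
                    "actions": {
                        "snapshot": {"delete": {"daysAfterCreationGreaterThan": days}}
                    },
                },
            }
        ]
    }


print(json.dumps(snapshot_cleanup_policy(90), indent=2))
```

Passing a prefix list, for example `snapshot_cleanup_policy(30, ["backups/vm"])`, limits the cleanup to one container path instead of the whole account.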

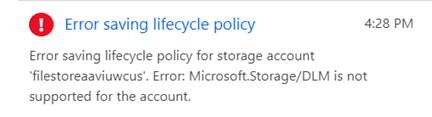

If the DLM feature is not registered, you will see the error below when you try to save a lifecycle policy.

For more Azure Lifecycle Management examples, please visit this URL. Please note that to use the DLM feature, you need to enable it in every subscription.

That’s all for today. I hope you liked this read. Thanks, and have a great day ahead.
