Step-by-step instructions to deploy high-GPU VMs in Azure Virtual Desktop (AVD)

In real-world VDI deployments, higher graphics performance often makes sense for the many users who need it, as the use of graphics grows in many industries every day.

Because of the cost, the decision to include GPUs in a design depends on the requirements of the user base, that is, on the graphics applications they run.

In an on-premises design, if the pre-sales team sells GPU capability to the customer, it is generally considered an important cost driver in the deal. During implementation, however, it can become a nightmare for the implementation team, because creating and configuring the GPU infrastructure correctly takes a lot of effort. I have deployed high-GPU infrastructure in multiple on-premises Citrix VDI environments and am currently deploying a few in Azure, so I am well placed to tell you about high-GPU VMs.

Picture Credit: Pexel.com

Users with higher performance needs require graphics card acceleration, which significantly improves the user experience and reduces the server processor load.

Certain limitations and additional components must be accounted for when deploying this feature on-premises. These are the requirements:

- You have a server platform that can host your chosen hypervisor and NVIDIA GPUs that support NVIDIA vGPU software. For a list of validated server platforms, refer to NVIDIA GRID Certified Servers.

- One or more NVIDIA GPUs that support NVIDIA vGPU software are installed in your server platform.

- A supported virtualization software stack is installed according to the instructions in the software vendor’s documentation.

- A virtual machine (VM) running a supported Windows guest operating system (OS) is configured in your chosen hypervisor.

- An NVIDIA license server is required, with primary and secondary nodes for high availability.

- Certain features and performance depend on the endpoint device.

For example, the supported use cases for Citrix XenServer are as follows:

Citrix Hypervisor Support

| Driver Package | Hypervisor or Bare-Metal OS | Software Product Deployment | Hardware Supported | Guest OS Support |
|---|---|---|---|---|
| NVIDIA vGPU for XenServer 8.25 | Citrix Hypervisor 8.2 | | | |
| NVIDIA vGPU for XenServer 7.15 | Citrix XenServer 7.1 | | | |

This entire process can take a very long time to deploy depending on logistics and hardware procurement.

Compared with an on-premises solution, one key benefit of Azure Virtual Desktop (AVD) is the ability to spin up Windows 10 instances with graphics cards (GPUs) on the fly, whereas delivering GPU graphics to on-premises VDI solutions traditionally takes significantly longer because of procurement, logistics, and implementation timelines.

Recently I tested multiple NV-series VMs with Windows 10 multi-session images. The most recent test used the new promo size, Standard NV6_Promo (6 vCPUs, 56 GiB memory). For the workload test we used the NVIDIA drivers that are available through the Azure VM extension.

I have divided the installation and configuration into 7 steps and they are as follows:

- Select an appropriate GPU optimized Azure virtual machine size

- Install the NVIDIA Graphics Driver in the VM

- Restart the VM

- Verify the driver installation status

- Configure GPU-accelerated app rendering

- Enable the WDDM graphics display driver for Remote Desktop Connections

- Restart the VM

The first step in this process is to deploy a Windows 10 multi-session high-GPU VM with the correct VM size, and therefore the correct graphics card, selected.

Step 1: Select an appropriate GPU optimized Azure virtual machine size

Select one of Azure’s NV-series, NVv3-series, NVv4-series, or NCasT4_v3-series VM sizes. These are tailored for app and desktop virtualization and enable most apps and the Windows user interface to be GPU accelerated. The right choice for your host pool depends on a number of factors, including your particular app workloads, desired quality of user experience, and cost. In general, larger and more capable GPUs offer a better user experience at a given user density, while smaller and fractional-GPU sizes allow more fine-grained control over cost and quality. Take the retirement of the NV series into account when selecting a VM size; see Microsoft’s details on NV retirement.
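To shortlist candidate sizes in a region, one option is to filter the JSON that `az vm list-sizes --location <region> --output json` prints. The sketch below is only an illustration: the name patterns are my assumption about how the four families above are named, and the field names (`name`, `numberOfCores`, `memoryInMB`) are assumed from the CLI output, so check both against your own subscription.

```python
import json
import re

# Assumed naming patterns for the GPU families listed above.
GPU_FAMILIES = {
    "NV": re.compile(r"^Standard_NV\d+(_Promo)?$"),
    "NVv3": re.compile(r"^Standard_NV\d+s_v3$"),
    "NVv4": re.compile(r"^Standard_NV\d+as_v4$"),
    "NCasT4_v3": re.compile(r"^Standard_NC\d+as_T4_v3$"),
}


def gpu_family(size_name):
    """Return the family label for a VM size name, or None if it matches no pattern."""
    for family, pattern in GPU_FAMILIES.items():
        if pattern.match(size_name):
            return family
    return None


def gpu_sizes(sizes_json):
    """Reduce the JSON text from `az vm list-sizes` to (family, name, cores, memory MB) rows."""
    rows = []
    for size in json.loads(sizes_json):
        family = gpu_family(size["name"])
        if family:
            rows.append((family, size["name"], size["numberOfCores"], size["memoryInMB"]))
    return sorted(rows)
```

Save the CLI output to a file, read it as text, and pass it to `gpu_sizes` to get a sorted shortlist that you can compare on cores, memory, and price.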

Step 2: Install the NVIDIA Graphics Driver in the VM

Once you have deployed the NV-series Azure instance, you need to install the graphics drivers before you can use the GPU. In my case I deployed an NV6 VM. The next step is to install supported graphics drivers in the virtual machine.

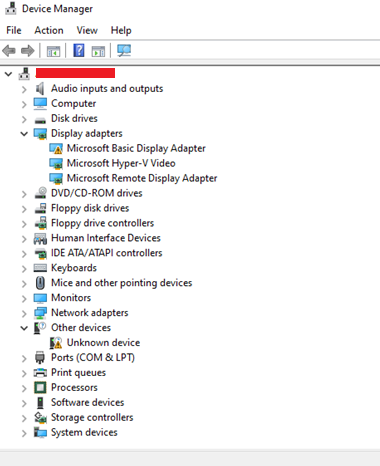

Let’s see how to do that. First, go to Control Panel and open Device Manager. Expand Display adapters and you can see that the GPU display adapter for the VM is missing.

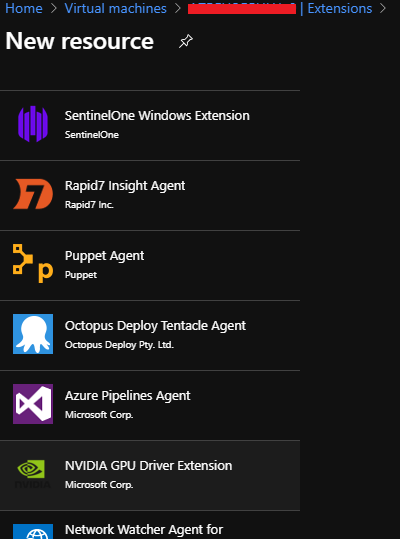

Next, log in to the Azure portal. I decided to install the drivers using the Azure VM extension; GRID drivers are installed automatically for these VM sizes.
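If you prefer to script this instead of clicking through the portal, the same extension can be attached with the Azure CLI. The sketch below builds the `az vm extension set` call and the `az vm restart` call for the restart that follows. The extension name `NvidiaGpuDriverWindows` and publisher `Microsoft.HpcCompute` are what I understand the NVIDIA extension for Windows to be called; the resource group and VM names are hypothetical placeholders. NVv4 sizes use AMD GPUs, so this NVIDIA extension would not apply to them.

```python
import shutil
import subprocess


def driver_extension_command(resource_group, vm_name):
    """Build the Azure CLI call that attaches the NVIDIA GPU driver extension to a Windows VM."""
    return [
        "az", "vm", "extension", "set",
        "--resource-group", resource_group,
        "--vm-name", vm_name,
        "--publisher", "Microsoft.HpcCompute",
        "--name", "NvidiaGpuDriverWindows",
    ]


def restart_command(resource_group, vm_name):
    """Build the Azure CLI call that restarts the VM after the driver is installed."""
    return ["az", "vm", "restart", "--resource-group", resource_group, "--name", vm_name]


def run(command):
    """Resolve the executable on PATH (az is az.cmd on Windows) and run the command."""
    executable = shutil.which(command[0])
    if executable is None:
        raise FileNotFoundError(command[0] + " was not found on PATH")
    subprocess.run([executable, *command[1:]], check=True)
```

For example, `run(driver_extension_command("rg-avd-gpu", "avd-gpu-0"))` followed by `run(restart_command("rg-avd-gpu", "avd-gpu-0"))`, where both names are placeholders for your own resources.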

Step 3: Restart the VM

Step 4: Verify the driver installation status

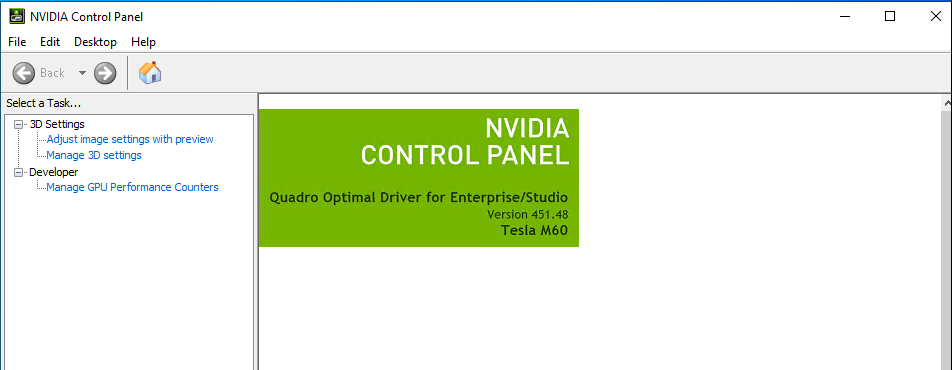

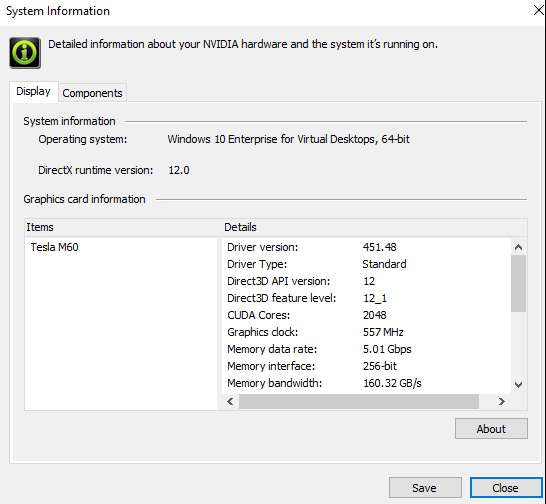

Connect to the desktop of the VM using the Azure Virtual Desktop client. Click the NVIDIA icon in the taskbar and check in the NVIDIA setup whether the driver is installed correctly.

Check the detailed information about the NVIDIA hardware.

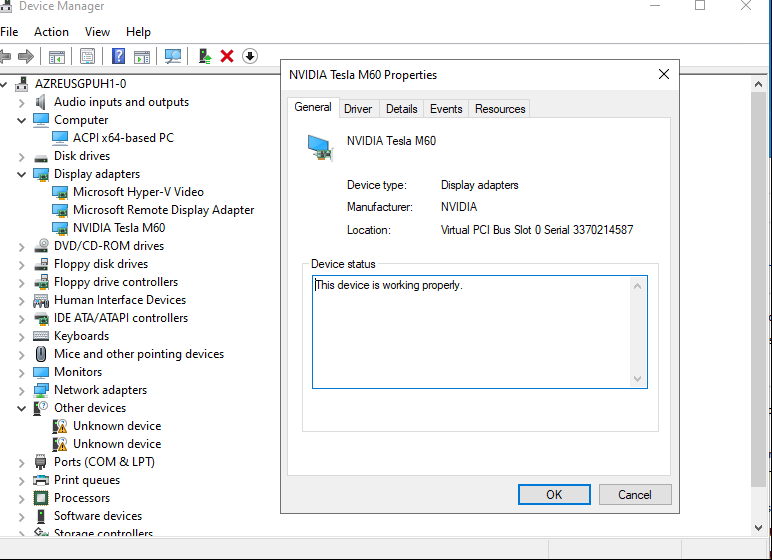

Go to Device Manager again and you can see that the GPU now appears in the list of display adapters.

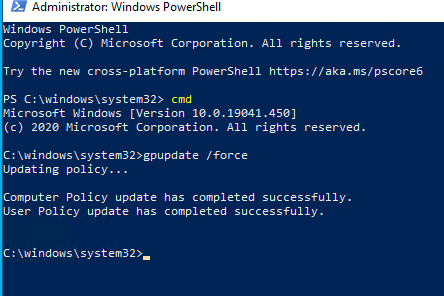

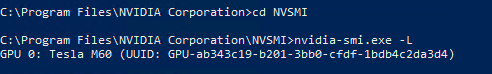

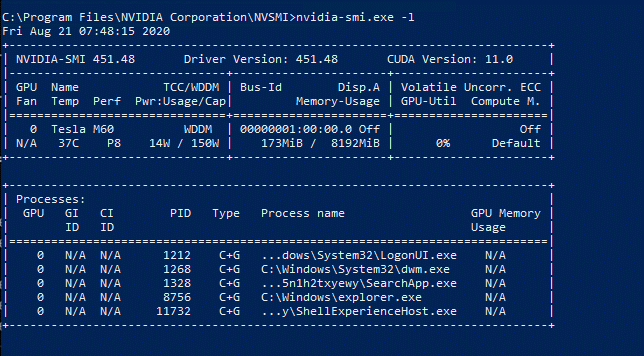

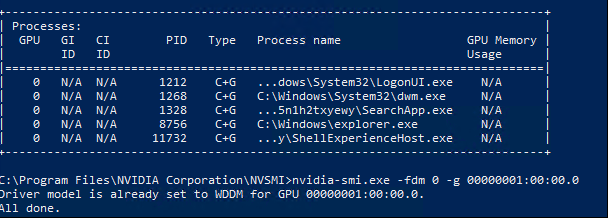

To query the GPU device state, run the nvidia-smi command-line utility installed with the driver.

Open a command prompt and change to the C:\Program Files\NVIDIA Corporation\NVSMI directory.

Run nvidia-smi. If the driver is installed, you will see output similar to the following. The GPU-Util shows 0% unless you are currently running a GPU workload on the VM. Your driver version and GPU details may be different from the ones shown.
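When you need to check many session hosts, the same query can be scripted. The sketch below is a minimal example that calls `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader` options and parses the result. The chosen fields and the function names are my own; it assumes `nvidia-smi` is on the PATH or that you pass the full path to the executable.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi, in the order they come back.
QUERY_FIELDS = ["name", "driver_version", "utilization.gpu"]


def parse_gpu_rows(csv_text):
    """Turn the CSV text printed by nvidia-smi into one dict per GPU."""
    reader = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [
        dict(zip(QUERY_FIELDS, (cell.strip() for cell in row)))
        for row in reader
        if row  # skip blank lines
    ]


def query_gpus(nvidia_smi="nvidia-smi"):
    """Run nvidia-smi and return the parsed rows; raises if the tool fails or is missing."""
    result = subprocess.run(
        [nvidia_smi, "--query-gpu=" + ",".join(QUERY_FIELDS), "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_rows(result.stdout)
```

If the tool is not on the PATH, pass the path to `nvidia-smi.exe` in the NVSMI directory mentioned above. An empty list or an exception is a hint that the driver is not installed correctly.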

Step 5: Configure GPU-accelerated app rendering

By default, apps and desktops running on Windows Server are rendered with the CPU and do not leverage available GPUs for rendering.

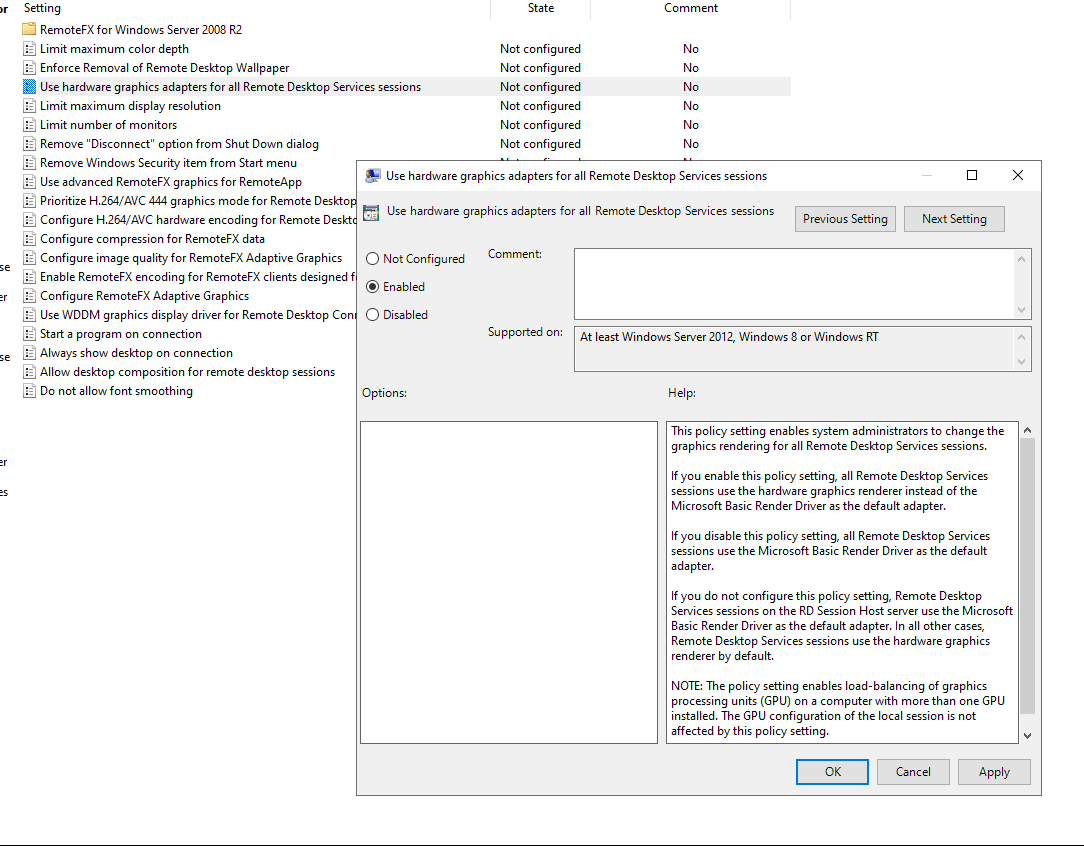

This policy setting enables system administrators to change the graphics rendering for all Remote Desktop Services sessions.

If you enable this policy setting, all Remote Desktop Services sessions use the hardware graphics renderer instead of the Microsoft Basic Render Driver as the default adapter.

If you disable this policy setting, all Remote Desktop Services sessions use the Microsoft Basic Render Driver as the default adapter.

If you do not configure this policy setting, Remote Desktop Services sessions on the RD Session Host server use the Microsoft Basic Render Driver as the default adapter. In all other cases, Remote Desktop Services sessions use the hardware graphics renderer by default.

To configure Group Policy for the session host to enable GPU-accelerated rendering:

- Connect to the desktop of the VM using an account with local administrator privileges.

- Open the Start menu and type “gpedit.msc” to open the Group Policy Editor.

- Navigate the tree to Computer Configuration > Administrative Templates > Windows Components > Remote Desktop Services > Remote Desktop Session Host > Remote Session Environment.

- Select policy Use hardware graphics adapters for all Remote Desktop Services sessions and set this policy to Enabled to enable GPU rendering in the remote session.

If you want to automate this, the registry key details are below.

| Setting | Value |
|---|---|
| Registry Hive | HKEY_LOCAL_MACHINE |
| Registry Path | SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services |
| Value Name | bEnumerateHWBeforeSW |
| Value Type | REG_DWORD |
| Enabled Value | 1 |
| Disabled Value | 0 |

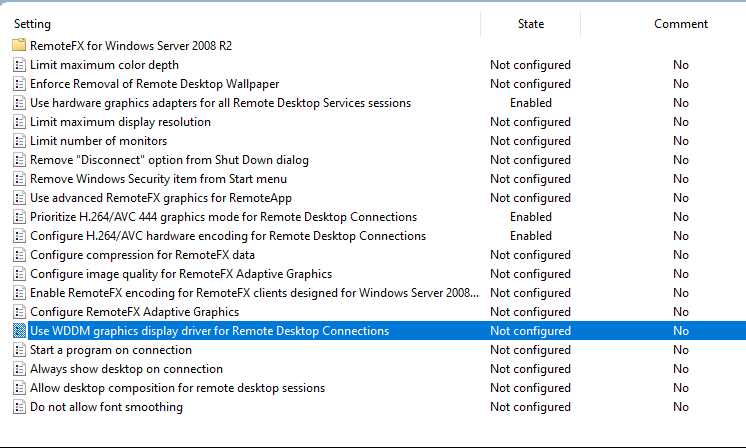
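As one way to automate the setting, the sketch below builds a `reg add` command for the policy key and runs it on the session host. The helper names are my own, and the script has to run in an elevated session because it writes under HKEY_LOCAL_MACHINE.

```python
import subprocess
import sys

# Policy key from the registry details above (HKLM is the short form of HKEY_LOCAL_MACHINE).
POLICY_KEY = r"HKLM\SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services"


def reg_add_dword(value_name, data):
    """Build a `reg add` command that writes a REG_DWORD under the policy key."""
    return [
        "reg", "add", POLICY_KEY,
        "/v", value_name,
        "/t", "REG_DWORD",
        "/d", str(data),
        "/f",  # overwrite an existing value without prompting
    ]


def enable_gpu_rendering():
    """Set bEnumerateHWBeforeSW to 1; only meaningful on the Windows session host itself."""
    if sys.platform != "win32":
        raise RuntimeError("This must run on the Windows session host")
    subprocess.run(reg_add_dword("bEnumerateHWBeforeSW", 1), check=True)
```

Calling `enable_gpu_rendering()` is the scripted equivalent of setting the policy to Enabled. Passing 0 to `reg_add_dword` instead writes the Disabled value.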

Step 6: Enable the WDDM graphics display driver for Remote Desktop Connections

This policy setting lets you enable WDDM graphics display driver for Remote Desktop Connections.

If you enable or do not configure this policy setting, Remote Desktop Connections will use WDDM graphics display driver.

If you disable this policy setting, Remote Desktop Connections will NOT use WDDM graphics display driver. In this case, the Remote Desktop Connections will use XDDM graphics display driver.

For this change to take effect, you must restart Windows.

If you want to automate this, the registry key details are below.

| Setting | Value |
|---|---|
| Registry Hive | HKEY_LOCAL_MACHINE |
| Registry Path | SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services |
| Value Name | fEnableWddmDriver |
| Value Type | REG_DWORD |
| Enabled Value | 1 |
| Disabled Value | 0 |
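Before the final restart, it can be useful to confirm that both policy values are in place. The sketch below parses the text that `reg query` prints for the policy key and reports which of the two values, `bEnumerateHWBeforeSW` and `fEnableWddmDriver`, are not set to 1. The parsing assumes the usual `name  REG_DWORD  0x1` line layout of `reg query`; the function names are hypothetical.

```python
import re
import subprocess

POLICY_KEY = r"HKLM\SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services"

# Both policy values from Step 5 and Step 6 should be 1.
EXPECTED = {"bEnumerateHWBeforeSW": 1, "fEnableWddmDriver": 1}

# Assumed layout of a value line: indented name, type, hexadecimal data.
VALUE_LINE = re.compile(r"^\s+(\S+)\s+REG_DWORD\s+(0x[0-9a-fA-F]+)\s*$")


def parse_dwords(reg_query_output):
    """Extract REG_DWORD values from `reg query` output as a name-to-int dict."""
    values = {}
    for line in reg_query_output.splitlines():
        match = VALUE_LINE.match(line)
        if match:
            values[match.group(1)] = int(match.group(2), 16)
    return values


def missing_settings(reg_query_output):
    """Return the names of expected values that are absent or not set to 1."""
    actual = parse_dwords(reg_query_output)
    return sorted(name for name, want in EXPECTED.items() if actual.get(name) != want)


def check_session_host():
    """Query the policy key on the local Windows machine and report missing settings."""
    result = subprocess.run(["reg", "query", POLICY_KEY], capture_output=True, text=True, check=True)
    return missing_settings(result.stdout)
```

An empty list from `check_session_host()` means both values are set and the VM is ready for the restart in Step 7.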

Step 7: Restart the VM

That’s all, you are done. Let’s perform some testing.

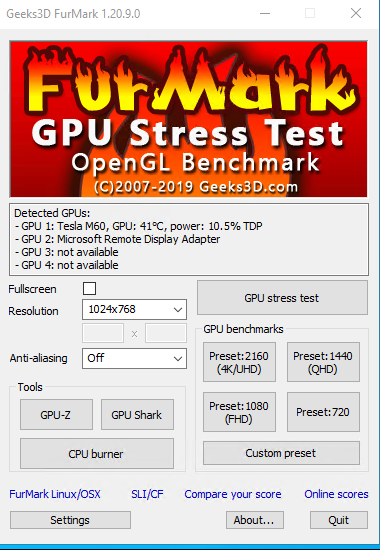

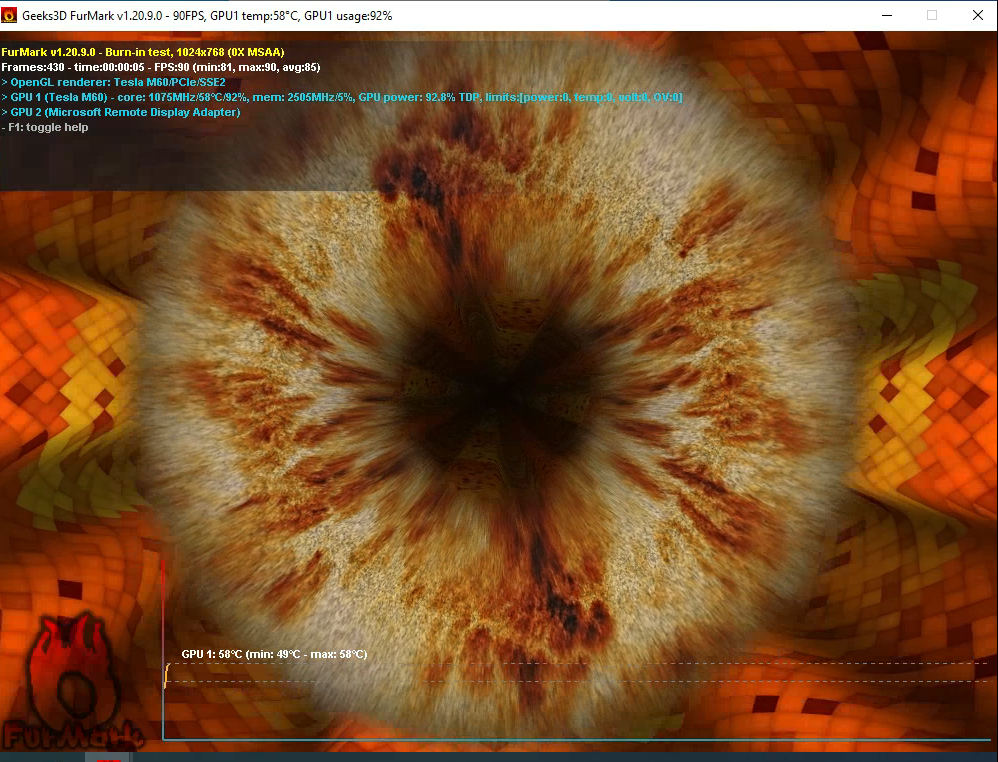

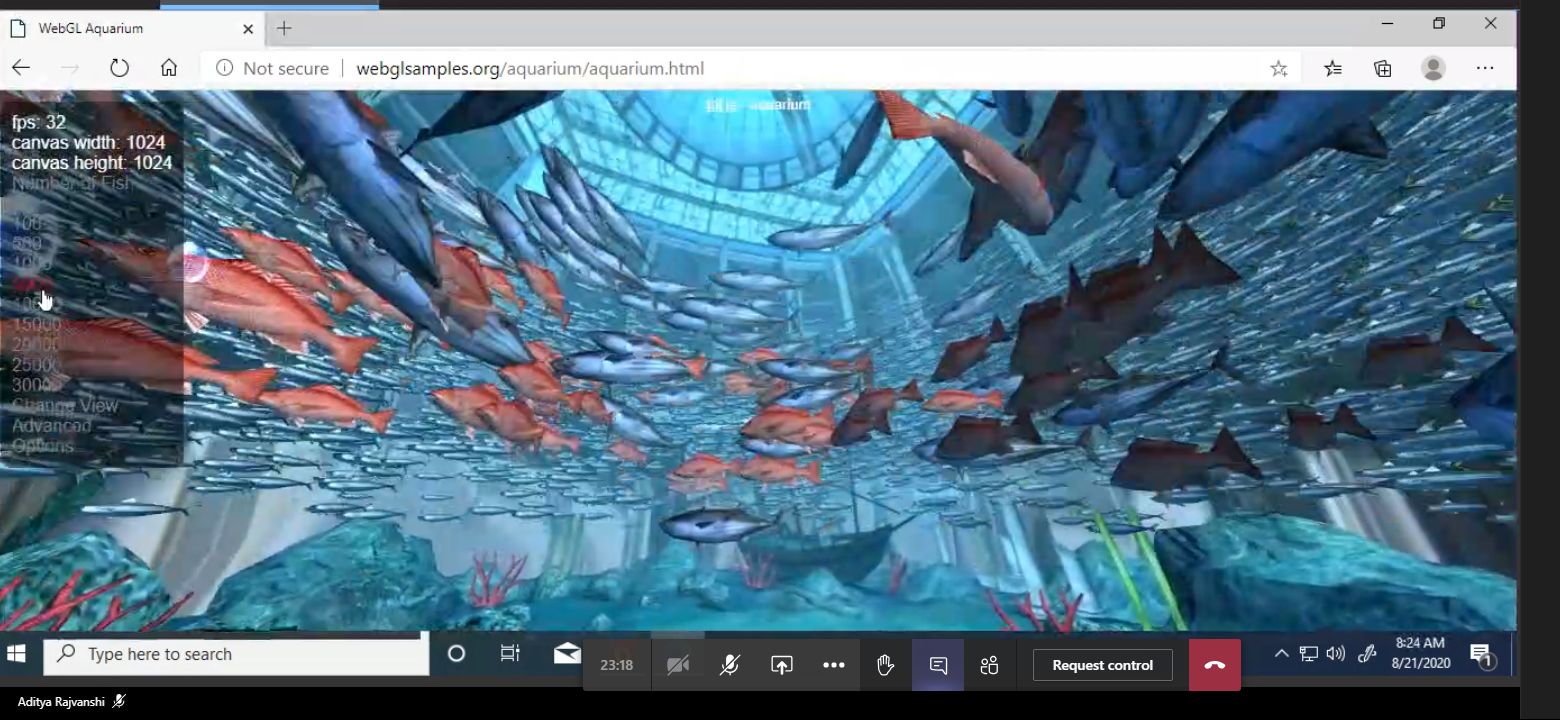

For the testing I used the Geeks3D FurMark application to stress the GPU, and I also ran Affinity as a remote application (RemoteApp) and played an asteroid video.

The stress test averaged 57 frames per second.

Another test is done.

My testing showed that GPUs provide significant improvements to graphics and user experience for AVD users. However, because of the cost, the need for a GPU depends on the requirements of the user base (graphics applications). In my experience, on-premises graphics-based VMs are still much cheaper than AVD today, but the cost of high-GPU VMs may decrease in the future, as demand is increasing every day in many enterprises and Microsoft is bringing more Azure VM sizes to meet this need.

I hope you liked this post. Thanks for reading, and have a great day ahead.